Detecting Cause-Effect Relations in Natural Language

Anyone who has used a recent version of Specmate might have already seen an amazing new feature. The overview screen of any Cause-Effect-Graph now contains an inconspicuous little button titled “Generate Model”. Clicking this button triggers a chain of systems that uses natural language processing to turn a sentence like

If the user has no login, and login is needed, or an error is detected, a warning window is shown and a signal is emitted.

directly into a CEG, without any additional work from the user:

In this article we will do a deep dive into this feature, have a look at the natural language processing (NLP) pipeline required to make such a system work, see different approaches to implement it, and figure out how to harness the power of natural language processing for our goals.

Modeling Requirements

Specmate uses so-called Cause-Effect-Graphs (CEGs) to model requirements. A CEG is a simple directed graph that shows how different components and events are causally related. Take for example the following requirement:

If the system detects a missing or invalid password, it should log an error and display a warning window.

In a CEG we would model this in the following way: We have two causes:

“The system detects a missing password” and “The system detects an invalid password”

These two are causally related to the two effects:

“The system displays a warning window” and “The system logs an error”

Both effects are true if at least one of the causes is fulfilled. In Specmate this model looks like this:

These models not only make it simpler to grasp the structure of the requirement, they also allow us to do further computations on them. Specmate uses CEG models to automatically generate tests for the requirement.
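To make the idea of "computing on a model" concrete, here is a minimal sketch of the password requirement above as a data structure. The class and function names (`Effect`, `evaluate`, `truth_table`) are hypothetical and do not reflect Specmate's internal model or its test generation algorithm; the sketch only shows that an explicit structure can be evaluated mechanically.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Effect:
    """An effect node together with the causes that feed into it (hypothetical model)."""
    text: str
    causes: Tuple[str, ...]
    operator: str = "or"  # "or": one fulfilled cause is enough, "and": all are needed


def evaluate(effect: Effect, facts: Dict[str, bool]) -> bool:
    """Decide whether the effect holds for a given assignment of the causes."""
    values = [facts[cause] for cause in effect.causes]
    return any(values) if effect.operator == "or" else all(values)


def truth_table(causes: Tuple[str, ...], effects: List[Effect]):
    """Enumerate every combination of causes together with the resulting effects."""
    rows = []
    for values in product([False, True], repeat=len(causes)):
        facts = dict(zip(causes, values))
        rows.append((values, tuple(evaluate(e, facts) for e in effects)))
    return rows


CAUSES = (
    "The system detects a missing password",
    "The system detects an invalid password",
)
EFFECTS = [
    Effect("The system logs an error", CAUSES),
    Effect("The system displays a warning window", CAUSES),
]

for cause_values, effect_values in truth_table(CAUSES, EFFECTS):
    print(cause_values, "->", effect_values)
```

Each printed row is a candidate test case: a combination of inputs and the outcome the requirement demands for it.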

The Language of Cause and Effect

While having requirements in a structured form like a CEG definitely has many advantages, creating many CEG models can be time-consuming. Since the requirement is very often already available in another format (often plain text), the idea of automating this process comes quite naturally.

This was the idea behind the aforementioned feature: Given a requirement described in natural language (Specmate currently supports requirements in English or German), generate an appropriate model.

The first approach: A (Syntax-) Rule Based System

It might be surprising to some that our first approach to this problem was a rule based system. While deep learning is currently very much en vogue in the field of natural language processing, we wanted to start with a simpler approach that would work even when large amounts of training data aren’t available, as was the case here.

A rule based system is often a solid choice as a first try, since in many cases a big chunk of the problem can be solved with only a handful of carefully crafted rules. In the case of cause effect detection, we are using a total of 33 patterns to detect causal relationships. For example we have three patterns to detect “if-then” constructs:

If [CAUSE], [EFFECT]

[EFFECT] if [CAUSE]

If [CAUSE] then [EFFECT]

Notice that these patterns are completely syntactical. There is no fancy NLP preprocessing going on. A simple version of the patterns could be implemented using nothing but regular expressions. One can then further process the detected causes and effects by applying rules that take care of conjunctions (like “and”, “or”, “neither … nor … ”, etc.). Lastly an additional process takes the (now split) sentence and generates the nodes for the model from the fragments.
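As a rough illustration of how far plain regular expressions get us, here is a minimal sketch of the three if-then patterns. The expressions and the function name `split_cause_effect` are assumptions made for this example, not the actual patterns used in Specmate, and they are deliberately naive.

```python
import re

# Hypothetical regex versions of the three if-then patterns. Order matters:
# "If X then Y" has to be tried before the more general "If X, Y".
IF_THEN_PATTERNS = [
    # If [CAUSE] then [EFFECT]
    re.compile(r"^if\s+(?P<cause>.+?),?\s+then\s+(?P<effect>.+?)\.?$", re.IGNORECASE),
    # If [CAUSE], [EFFECT]
    re.compile(r"^if\s+(?P<cause>.+?),\s*(?P<effect>.+?)\.?$", re.IGNORECASE),
    # [EFFECT] if [CAUSE]
    re.compile(r"^(?P<effect>.+?),?\s+if\s+(?P<cause>.+?)\.?$", re.IGNORECASE),
]


def split_cause_effect(sentence):
    """Return (cause, effect) for the first matching pattern, or None."""
    text = sentence.strip()
    for pattern in IF_THEN_PATTERNS:
        match = pattern.match(text)
        if match:
            return match.group("cause"), match.group("effect")
    return None


print(split_cause_effect(
    "If the system detects a missing or invalid password, "
    "it should log an error and display a warning window."
))
```

Even this tiny version hints at the maintenance cost: every new formulation needs another expression, and the order in which the expressions are tried starts to matter.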

Let us look at the example from the top.

If the system detects a missing or invalid password, it should log an error and display a warning window.

We can apply the first rule and get the cause

the system detects a missing or invalid password

and the effect

it should log an error and display a warning window.

We then split the sentence at the “and” and the “or” so we get two causes:

the system detects a missing

invalid password

and the two effects

it should log an error

display a warning window

From this we can generate the model above, but it requires some additional processing, as we will see later. These kinds of systems are quite intuitive and yet the results are very robust and the generated models are of high quality. All of this makes rule based systems a good testing baseline for any other systems down the line. In the case of Specmate the rule based system is still used as a backup method in case any of the more “fancy” methods fail.
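The splitting step from the walkthrough above can be sketched in a few lines. The function name `split_at_conjunctions` is made up for this example; the point is only to show that a purely textual split reproduces the incomplete fragments seen above.

```python
import re


def split_at_conjunctions(phrase):
    """Naively split a phrase at every standalone "and" / "or"."""
    parts = re.split(r"\s+(?:and|or)\s+", phrase)
    return [part.strip() for part in parts if part.strip()]


causes = split_at_conjunctions("the system detects a missing or invalid password")
effects = split_at_conjunctions("it should log an error and display a warning window")

print(causes)
print(effects)
```

The first cause has lost its noun and the second has lost its subject and verb, which is exactly the additional processing the fragments still need.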

The second approach: Natural Language Processing and Dependency Patterns

While a lot can be said in favor of a simple rule based system, there are downsides that made us steer away from it.

The first problem with the previously described system is that it is quite rigid. Any changes and improvements we wanted to implement meant that we had to dig through a lot of regular expressions and string parsing infrastructure. So the first goal was to make the system more flexible.

The second problem was that many formulations required multiple rules, despite being semantically exactly the same. Take for example the following two sentences:

If the tool detects an error, the tool shows a warning window.

The tool shows a warning window, if the tool detects an error.

While these two sentences only differ in their word order, they required two different rules. And this is where natural language processing came into play. For the model generation in Specmate we are using a dependency parser.

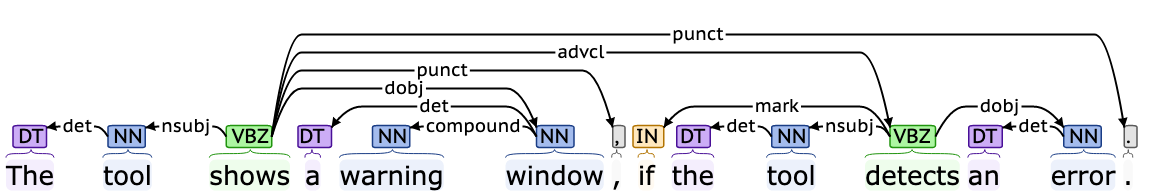

To explain dependency parses for the uninitiated: A dependency parse tries to describe the structure of a sentence in terms of binary relationships between the words. What is nice about dependency parses is that they are independent of the word order.

So let’s take our example sentences and feed them into the CoreNLP dependency parser:

As one can see, while the order of the words is different, the relationships between them have stayed the same. There is still the same adverbial clause (advcl) relation between “shows” and “detects”, still the same compound relation between “window” and “warning”, and so on.

So let’s go through the phrase “The tool shows a warning window”, starting at the word “shows” (it is the root of the dependency parse tree, since it has no incoming edges).

The dependency parser has correctly identified that the verb has the subject phrase (nsubj) “the tool” and the object phrase (dobj) “a warning window”. Moreover it also found the determiners (det) “the” and “a” of the noun phrases “tool” and “warning window”, and lastly it correctly identified that “warning window” is a compound noun phrase.

The utility of dependency parses comes from the fact that they are independent of word order. Both sentences have the same arcs. Most notable for our purpose is the adverbial clause arc (advcl), since it connects the effect with the cause.
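The claim that both sentences have the same arcs can be checked mechanically. In the sketch below the arcs are written down by hand from the description above (they are an assumption, not actual parser output), as triples of head index, relation and dependent index. Punctuation is left out for brevity.

```python
from collections import Counter


def word_arcs(sentence, arcs):
    """Turn index-based arcs into (head word, relation, dependent word) triples."""
    words = sentence.lower().split()
    return Counter((words[head], rel, words[dep]) for head, rel, dep in arcs)


# Hand-written arcs (assumed), token indices start at 0.
IF_FIRST = "If the tool detects an error the tool shows a warning window"
IF_FIRST_ARCS = [
    (8, "advcl", 3), (3, "mark", 0), (3, "nsubj", 2), (2, "det", 1),
    (3, "dobj", 5), (5, "det", 4), (8, "nsubj", 7), (7, "det", 6),
    (8, "dobj", 11), (11, "det", 9), (11, "compound", 10),
]

IF_LAST = "The tool shows a warning window if the tool detects an error"
IF_LAST_ARCS = [
    (2, "nsubj", 1), (1, "det", 0), (2, "dobj", 5), (5, "det", 3),
    (5, "compound", 4), (2, "advcl", 9), (9, "mark", 6), (9, "nsubj", 8),
    (8, "det", 7), (9, "dobj", 11), (11, "det", 10),
]

same = word_arcs(IF_FIRST, IF_FIRST_ARCS) == word_arcs(IF_LAST, IF_LAST_ARCS)
print("same arcs:", same)
```

Once the token positions are replaced by the words themselves, the two parses are indistinguishable, which is why a single rule can cover both formulations.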

Now consider the following sentence:

When the tool detects an error, the tool shows a warning window.

This would again be a different rule in our old system, but if we look at the dependency parse, we see that the adverbial clause arc is still there:

The only change we can see is that the “If” is now a “When” and the arc from “detects” is now an adverbial modifier (advmod) and not a marker (mark).

Let’s ignore the “advcl” arc and focus on the rest. If we collect every word that depends on “detects”, directly or indirectly, we get “When the tool detects an error”. If we do the same for “shows” we get “the tool shows a warning window”. These two phrases are exactly our cause and effect phrases. If we additionally remove the “When”, we have pretty much all the information we need to fill in a CEG diagram.
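The observation above can be sketched as a small graph traversal: drop the advcl arc, then collect everything below each of its two ends. The arcs are again hand-written from the description in this article (an assumption, not real parser output), and the helper names are hypothetical.

```python
WORDS = "When the tool detects an error the tool shows a warning window".split()

# (head index, relation, dependent index), written by hand for this example.
ARCS = [
    (8, "advcl", 3), (3, "advmod", 0), (3, "nsubj", 2), (2, "det", 1),
    (3, "dobj", 5), (5, "det", 4), (8, "nsubj", 7), (7, "det", 6),
    (8, "dobj", 11), (11, "det", 9), (11, "compound", 10),
]


def descendants(root, arcs):
    """Collect the root and every token reachable from it along the arcs."""
    children = {}
    for head, _, dep in arcs:
        children.setdefault(head, []).append(dep)
    found, stack = {root}, [root]
    while stack:
        for dep in children.get(stack.pop(), []):
            if dep not in found:
                found.add(dep)
                stack.append(dep)
    return found


def phrase(token_ids):
    """Put the collected tokens back into sentence order."""
    return " ".join(WORDS[i] for i in sorted(token_ids))


# The advcl arc points from the effect verb to the cause verb.
effect_head, cause_head = next((h, d) for h, rel, d in ARCS if rel == "advcl")
remaining = [arc for arc in ARCS if arc[1] != "advcl"]

cause = phrase(descendants(cause_head, remaining))
effect = phrase(descendants(effect_head, remaining))
print(cause)
print(effect)
```

Removing the single advcl arc is enough to make the tree fall apart into the cause and the effect.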

To formalize this idea we wrote a simple language that allows us to write rules that transform the dependency parse and filter the subtrees. The rule that would match the first examples would look like this:

[EFFECT] – advcl -> [CAUSE] – mark -> IN:’if’

This simply states the observations we have made. We essentially create patterns to match against the dependency parse. This becomes a lot more interesting, when we introduce a second rule:

[PART_A] – conj -> [PART_B]

[PART_A] – cc -> CC:’or’

This rule is similar to our first rule, except that instead of matching cause and effect, it encapsulates the structure of a disjunction (“Option A” OR “Option B”).

Consider this example sentence:

If the user enters the wrong username, or the user enters the wrong password, the tool shows a warning window.

The parser produces the following dependency parse:

This might look a little more difficult so let’s break it down step by step:

We start at “shows” since it is the root of the parse tree (we know this since it has no incoming dependencies).

Then we apply our first rule:

The subtree below “shows” becomes the effect and the subtree below “enters” becomes the cause. Since the “if” was not specified by the rule to belong to either tree it is ignored (we don’t need the “if” anymore since the rule already told us that it is a cause/effect relationship).

So now we have split our original parse tree into two smaller trees. Now we apply the next rule on those. We can see that our “or rule” matches on the cause:

So we get “the user enters the wrong username” and “the user enters the wrong password” as the two parts of our disjunction. All of this tells us that we have two causes that are combined in an “or relation”. This is also the structure of our final Cause-Effect-Graph. The rules also directly split the input into the subphrases that we can put into the nodes of the graph.
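The two rules and the walkthrough above can be sketched as a tiny pattern matcher. Everything here is an assumption made for illustration: the arcs are written by hand (punctuation omitted), and the dictionary encoding of the rules is a stand-in for Specmate's actual rule language, which is not shown in this article.

```python
WORDS = ("If the user enters the wrong username or the user enters "
         "the wrong password the tool shows a warning window").split()

# (head index, relation, dependent index), hand-written for this example.
ARCS = [
    (16, "advcl", 3), (3, "mark", 0), (3, "nsubj", 2), (2, "det", 1),
    (3, "dobj", 6), (6, "det", 4), (6, "amod", 5), (3, "cc", 7),
    (3, "conj", 10), (10, "nsubj", 9), (9, "det", 8), (10, "dobj", 13),
    (13, "det", 11), (13, "amod", 12), (16, "nsubj", 15), (15, "det", 14),
    (16, "dobj", 19), (19, "det", 17), (19, "compound", 18),
]

# Hypothetical encodings of the two rules from the text.
CAUSE_EFFECT = {"edges": [("EFFECT", "advcl", "CAUSE"), ("CAUSE", "mark", "IF")],
                "words": {"IF": "if"}}
DISJUNCTION = {"edges": [("PART_A", "conj", "PART_B"), ("PART_A", "cc", "OR")],
               "words": {"OR": "or"}}


def match(rule, arcs, binding=None, position=0):
    """Return the first variable -> token binding satisfying all rule edges."""
    binding = binding or {}
    if position == len(rule["edges"]):
        return binding
    src, rel, dst = rule["edges"][position]
    for head, arc_rel, dep in arcs:
        if arc_rel != rel:
            continue
        if binding.get(src, head) != head or binding.get(dst, dep) != dep:
            continue
        required = rule["words"].get(dst)  # word constraints apply to targets only
        if required and WORDS[dep].lower() != required:
            continue
        result = match(rule, arcs, {**binding, src: head, dst: dep}, position + 1)
        if result is not None:
            return result
    return None


def subtree(root, arcs, skip):
    """Collect root and its descendants without entering tokens in `skip`."""
    found, stack = {root}, [root]
    while stack:
        node = stack.pop()
        for head, _, dep in arcs:
            if head == node and dep not in skip and dep not in found:
                found.add(dep)
                stack.append(dep)
    return found


def apply_rule(rule, arcs, keep):
    """Match the rule and return the token set below each variable in `keep`."""
    binding = match(rule, arcs)
    if binding is None:
        return None
    bound = set(binding.values())
    return {var: subtree(binding[var], arcs, bound - {binding[var]}) for var in keep}


def text(token_ids):
    return " ".join(WORDS[i] for i in sorted(token_ids))


split = apply_rule(CAUSE_EFFECT, ARCS, ["CAUSE", "EFFECT"])
cause_arcs = [a for a in ARCS if a[0] in split["CAUSE"] and a[2] in split["CAUSE"]]
options = apply_rule(DISJUNCTION, cause_arcs, ["PART_A", "PART_B"])

print("effect:", text(split["EFFECT"]))
print("cause A:", text(options["PART_A"]))
print("cause B:", text(options["PART_B"]))
```

Tokens that a rule binds but does not assign to a kept variable, such as the “if” and the “or”, are dropped automatically, which mirrors the behaviour described in the walkthrough.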

In Specmate we have a list of such rules for many more of these relationships: additional rules for cause and effect, and rules for constructs like “and”, “neither… nor”, “either… or”, “not”, etc. Then we have a second system that takes the parse provided by the rules and generates the model.

Limitations:

Currently there are limits to this approach: The quality of the system hinges directly on the quality of the dependency parse, so if the parser is poorly trained, the rules won’t be able to find the patterns we have described. There are two workarounds we can use in these situations:

One is to improve the parser by feeding it more and better training data. The other is to add additional rules that can handle suboptimal parses. Since writing a new rule is now a matter of mere seconds, adding new rules is a viable approach.

Another problem we have had since the first approach is that simply splitting the sentence may not be enough for all problems. Consider the sentence:

If the tool detects an invalid or missing password, it shows a warning window.

If we were to simply split at the “or” we would run into the problem that we are short a noun and a verb. Neither “the tool detects an invalid” nor “missing password” is a useful phrase.

So we need to “repair” those phrases. In our case this was done as a preprocessing step:

The preprocessing pipeline

Specmate’s NLP preprocessing pipeline has the task of taking whatever text the user has entered and turning it into sentences the generator can deal with. It works by iteratively improving the sentence. Each step of the pipeline is its own little subsystem that focuses on fixing a single aspect of the sentence:

Input: If the tool detects an invalid or missing password, it shows a warning window.

Step 1: Complete Noun Phrases

The first step looks for conjunctions of adjectives and adds the missing nouns, in order to rephrase the sentence using conjunctions of noun phrases:

Result: If the tool detects an invalid password or missing password, it shows a warning window.

Step 2: Insert missing predicates

Now that we have conjunctions of noun phrases we add their respective predicate, such that we now have conjunctions of complete sentences. For ease of reading I added some determiners, but for the purpose of the pipeline they are not needed.

Result: If the tool detects an invalid password or detects [a] missing password, it shows a warning window.

Step 3: Insert missing Subjects

Sometimes we face the opposite problem to the one in the previous step: Instead of having a noun without a verb, we might have a verb without a noun. The last step of the preprocessing adds those subjects, so that we are left with easy-to-parse sentences.

Result: If the tool detects an invalid password or [the] tool detects [a] missing password, it shows a warning window.

And now we have a sentence our rules can perfectly deal with.
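The pipeline structure itself is simple: a list of steps, each taking a sentence and returning an improved one. The sketch below shows that structure with a toy version of step 1 only. It is an assumption-heavy illustration: the real steps work on parsed sentences, whereas this version uses a regular expression and a hard-coded adjective list (`ADJECTIVES`) in place of a part-of-speech tagger. Steps 2 and 3 are left out because they need that linguistic information.

```python
import re
from typing import Callable, List

Step = Callable[[str], str]

# Assumption: a toy lexicon standing in for real part-of-speech tags.
ADJECTIVES = {"invalid", "missing"}


def complete_noun_phrases(sentence: str) -> str:
    """Rewrite 'ADJ or ADJ NOUN' as 'ADJ NOUN or ADJ NOUN' (toy version of step 1)."""
    def repair(match):
        first, conjunction, second, noun = match.groups()
        if first.lower() in ADJECTIVES and second.lower() in ADJECTIVES:
            return f"{first} {noun} {conjunction} {second} {noun}"
        return match.group(0)  # not an adjective conjunction, leave untouched

    return re.sub(r"\b(\w+) (and|or) (\w+) (\w+)\b", repair, sentence)


def run_pipeline(sentence: str, steps: List[Step]) -> str:
    """Apply every step in order, each one working on the previous result."""
    for step in steps:
        sentence = step(sentence)
    return sentence


result = run_pipeline(
    "If the tool detects an invalid or missing password, it shows a warning window.",
    [complete_noun_phrases],
)
print(result)
```

Keeping every repair in its own function means a new step can be added to the list without touching the existing ones.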

Further Improvements

There are even more possible steps one could implement. Take for example coreference resolution. When we run the parse through the CoreNLP coreference resolver we get the following result:

This would allow us to replace the very unspecific “it” with the more specific “the tool”, thereby producing an even better parse:

If the tool detects an invalid password or [the] tool detects [a] missing password, [the] tool shows a warning window.

There are many more possible improvements one could add to make the results even better. For example we could detect cause-effect relations that span more than one sentence, as in the following example:

It is cold. Therefore it snows.

Additionally there can always be more patterns for implicit causality, but here we are getting to the final and probably most important point of the idea of generating models from plain text:

The quality of the model is directly dependent on the quality of the input.

Sure, we can use many different tools to improve our model generation technique and we will keep doing this, but this in no way replaces formulating good requirements. Even with all the improvements we discussed, Specmate won’t be able to parse a page-long sentence with subclauses and no regard for punctuation, but neither will a human engineer.

That being said, Specmate should now be capable of dealing with anything within reason.


17/12/2021